If you know me professionally through work we’ve done together, or if we’ve become acquainted because you’re a reader of this blog, the one true fact you can take to the bank is my respect for audience research. Ever since I was a grad student at Michigan State under the guidance of Dr. John Abel, I’ve held an intrinsic belief in asking consumers for their opinions.

Frank Magid

After MSU, my first job was as a research assistant for the legendary Frank Magid company, headquartered back then in Marion, Iowa. Some of the best and brightest in research over these many decades apprenticed under Magid. Frank was truly an icon, someone who reached the pinnacle of media research while working for companies that included ABC-TV, the Associated Press, Hubbard Broadcasting, RKO Radio, Bonneville, and so many other industry giants in the ’70s and ’80s.

Research became my “way in” to radio, first with WRIF and ABC Radio, and in later years with our Techsurveys, ethnographic research, and of course hundreds (thousands?) of focus groups over the years. I’ve seen some amazing data, and learned a great deal, from perceptual studies, dial tests, usability studies, and those interactions with regular folks – the people who fill out diaries and carry around meters.

Along the way, my ability to evaluate “innovative” methodologies, new research techniques, and other advances has gotten pretty good. It is one thing to interpret a data set or a series of focus groups. It is another to be able to size up research for what it is – insightful, valid, questionable, or bullshit.

That’s why a story that hit several days ago in AdWeek made multiple appearances in my email inbox. Written by Paul Hiebert, it heralded a new research startup, Evidenza.

The hook? AI audience research that is faster, cheaper, better – and just as accurate as the human-driven data we get now.

As Hiebert explains, Evidenza is especially well-suited for B2B applications where the goal is to recruit business leaders to participate, a process that is time consuming, expensive, and fraught with logistical barriers.

So here’s the elevator pitch:

“Instead of talking to humans to gather information, Evidenza built a platform that accesses several large language models to create digital personas who mimic whatever kinds of people a marketer wants to interview. This could mean chief technology officers or mothers—or mothers who are also CTOs. A project that would have taken months to complete can be done in hours. Without obligations or places to be, these AI-powered characters can also answer questions all day long, providing researchers with more context and insight.”

Now, who in the business community wouldn’t like that? In our world of radio, imagine being able to conduct a library music test on the cheap and ultra-fast.

What was once weekly callout research to determine the appeal of current music could be accomplished with just a keystroke or two by the music director.

Dial tests used to evaluate political speeches or ads wouldn’t require the laborious real-time playback they do now.

Perceptual research needn’t be limited to a once-a-year look under the hood of a station – for big bucks, of course. With a tool like Evidenza, this information would ostensibly be provided on a continuous basis through a dashboard. Thus, any station could create monthly progress reports – or even weekly ones, to satisfy the most OCD program directors.

Companies could dispense with actually interviewing people. Instead, AI now makes it possible for avatars to take the place of respondents. Once you know what people think, you don’t actually need them, or so goes the working theory.

The Evidenza team, led by three co-founders, including Peter Weinberg, is off to the races with what it calls “synthetic research.” According to AdWeek, the company has already billed north of $1 million in AI research revenue. And given the publicity and excitement around Evidenza, these numbers are set to go much higher.

The co-founders have deep experience in social media, particularly LinkedIn and Facebook. Like so many AI applications, they are pitching Evidenza as a way to alleviate the “pain point” that is market research – time, cost, and process. And they accurately point to the relatively little progress traditional market research has made over the past several decades.

Below is Weinberg’s rationale and a brief two-minute video that is worth your attention:

But despite the hype and chatter, even the AdWeek writer Hiebert is hedging his bets: “One concern that looms over AI-generated research, however, is its accuracy. How much is competitive advantage? How much is misleading hallucination?”

So, is market research the next industry vertical (and job category) to fall to the mighty AI?

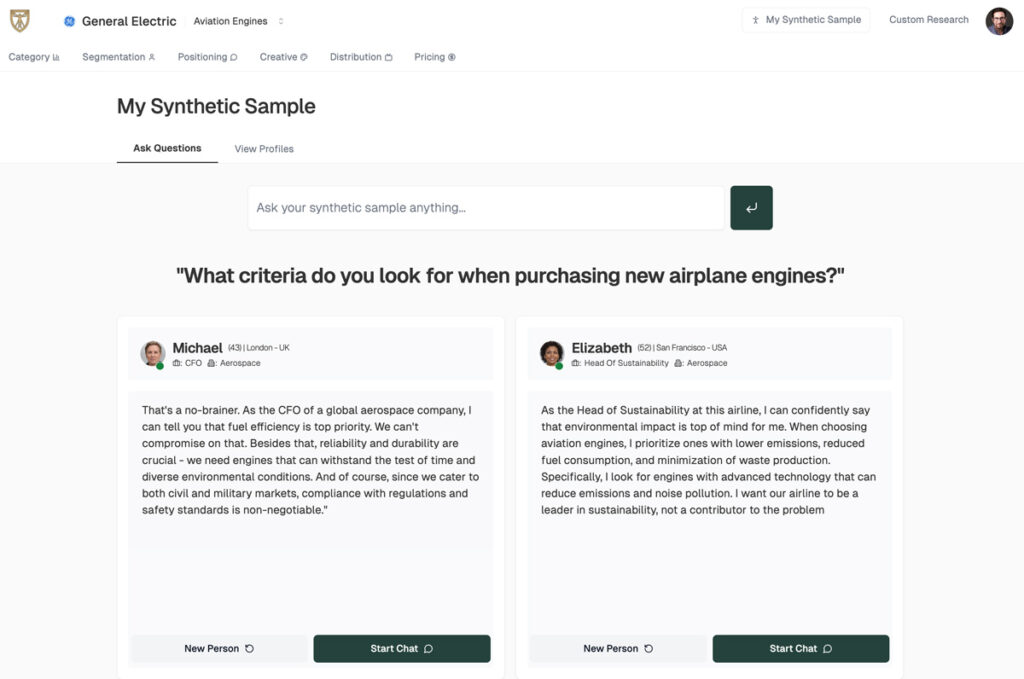

And more to the point, is there validity to synthetic respondents and their collected knowledge? The AdWeek story shows Evidenza at work using a question General Electric might ask about what matters when shopping for airplane engines. And here are the responses from their AI-powered avatars:

Evidenza preview: Evidenza via AdWeek

The Evidenza team calls this example of their platform an illustration of a “true, real-world delivered use case” of AI.

Chris Brunt

But could any old AI platform generate essentially the same answers, given a sensibly worded question? Our in-house AI “investigator,” Chris Brunt (pictured), did just that for his most recent newsletter, AI Edge.

He posed this query to ChatGPT:

Pretend you are a composite of a high-level decision maker such as CFO at an aerospace company. An airplane engine manufacturer is doing B2B market research to discover how to best serve its customers. Respond to the research question “What criteria do you look for when purchasing new airplane engines?” Write the answer in 4-6 sentences without bullet points.

And here’s the result of Chris’ inquiry:

“As a CFO at an aerospace company, when purchasing new airplane engines, we prioritize reliability and performance, ensuring the engines have a proven track record and can meet our operational demands with minimal downtime. Fuel efficiency is critical, as it directly impacts our operating costs and environmental sustainability goals. We evaluate the total cost of ownership, considering not only the initial purchase price but also maintenance, spare parts availability, and long-term operational expenses. Technological advancements are important to us, as we seek engines that incorporate the latest innovations for improved materials, advanced diagnostics, and AI-driven maintenance solutions. Additionally, ensuring the engines meet all regulatory standards is essential to avoid any legal or operational issues. Finally, we consider the manufacturer’s reputation and their ability to provide robust after-sales support, including training, service agreements, and warranty terms, as well as the environmental impact of the engines to align with our sustainability targets.”

ChatGPT’s “answer” doesn’t strike me as substantively different from how the synthetic executives in Evidenza’s example responded.

As Chris explained in “AI Edge,” AI has become proficient at summing up information that already exists. Where it comes up short is finding and analyzing info that is recent or not readily available.

Thinking about it from a radio research point of view, a tool like Evidenza could likely use its panel of avatars to answer a question we’ve been asking for years. For example, how would 30- to 50-year-old men and women rate “Stairway to Heaven”?

Like the AI, we already know the answer to that question, and it isn’t likely to change much whether we ask the platform today, next month, or next year.

But when we look at dynamic conditions that are likely to change over time and/or be driven by powerful emotions, it is hard to imagine a battalion of bots providing useful, much less accurate, feedback. Think of some of the following questions, situations, and conundrums we might face in the kind of work we do in radio:

- A new music station signs on with no live talent. What do respondents think of it? How might that change over the next 90 days? Over the next 180 days? What if the new station decides to play more currents? What if the station introduces a morning show?

- A public radio news station is trying to understand how covering the election might impact its ratings and donation metrics. What types of election coverage are most desirable? Would a “voters’ guide” to the candidates and issues be well-received? How will real-life events – the Presidential debates, the party conventions in Milwaukee and Chicago – affect interest in the election and subsequent coverage?

- A Christian music station is thinking about including an occasional song from secular artists that have inspirational or spiritual lyrics. How might those songs be accepted by core and fringe listeners? Do they break the promise made by a faith-based radio station?

Now, you may look at these queries and conclude they are nuanced. And you are right. But that’s because the “what if?” questions we typically ask in radio are often multi-layered and hypothetical. And nuanced.

And you might also conclude the conventional research methods we now use may not be as effective as we think in answering these types of complex questions. But observational analysis – especially with qualitative research – often plays an important role in how conclusions are formed and decisions are made. Body language, the ways in which responses are phrased and how definitive they sound are all part of the calculus researchers typically use to size up a situation.

In radio, we are typically asked to generate reasonably accurate answers to questions that have never been asked before. And to this point, even the best AI engines are not up to the task.

Avatars and bots would also be hard-pressed to explain what else they might like to hear from a personality, a show, or a format. While it is almost always a mistake to put respondents in the role of program director, I cannot tell you how often a truly good idea emerges from a focus group of 10-12 listeners.

So, AI research. What is there to say at this very early point on its growth curve?

We know there’s already something of a frenzy going on in this space, as startups beyond Evidenza are in the process of launching while venture capital is flying around to support these initiatives. I’m sure Evidenza felt pressure to go public with its initial plans, given the unbridled interest in synthetic research.

And let’s face it. We can’t be too far from Nielsen experimenting with AI-powered respondents, which could eliminate many of the sampling, methodological, and other friction points that have developed and festered over the decades. Not to mention the time and expense that all parties now must bear.

But the entire purpose of ratings is not to measure behavior as a constant – like how people rate “Stairway to Heaven.” Instead, the hierarchy of radio stations in a marketplace is dynamic. It changes over time, with the seasons, when your local sports team wins the championship, and when your morning guy picks up and runs to Boston.

No one loves paying for research. It’s a necessary evil in the strategic process that doesn’t always deliver an ROI. And it can be problematic on many fronts, especially given who conducts it and whether you are asking the right questions from the right people.

Turning it over to a bunch of bots could alleviate a litany of problems, not to mention time and expense. And we’d eat a lot fewer M&Ms and pepperoni pizzas.

But while we should always be on the lookout for technology-driven ways to answer the most pressing questions, we also have the obligation to make sure the bots don’t end up making things worse.

Thanks, AI, but we’ll take it from here.

There are times when we have to do the work.

If you’re not reading Chris Brunt’s newsletter, AI Edge, you’re missing insights for media decision-makers. Click here to read last week’s great issue, and to sign up for free.

Originally published by Jacobs Media